So many questions! I’ll try to answer as many of them as I can now and will answer the rest later (it’s nearly midnight here).

First of all, I must admit that my use of the L2P and P2L abbreviations could be misleading, as there are 2 kinds of L2P and P2L indexes:

- “proto indexes”, i.e. the index.p2l and index.l2p files that exist until the transaction is finished; and

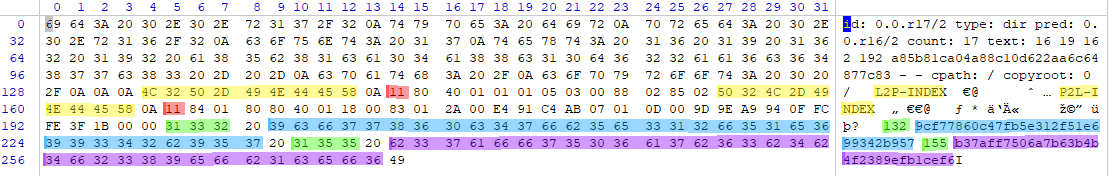

- L2P-INDEX and P2L-INDEX as part of the footer of the “rev file”; let’s call them “rev indexes”.

They are not the same, as you can see from the complexity of FSRoot#writeP2LIndex and FSRoot#writeL2PIndex; these methods convert “proto indexes” to “rev indexes”.

Mostly by P2L and L2P I meant “proto indexes”.

As for checksums: FNV-1a, MD5, and SHA-1 are all used in Subversion, but at different moments.

The FNV-1a checksum type is “int”, i.e. it has 4 bytes. It is only used inside the FSP2LEntry class, which corresponds to a P2L “proto index” (i.e. index.p2l) entry. Of course, when the transaction is finished and the P2L “proto index” is converted to the P2L “rev index”, the checksum goes into it as well.

By the way, to read the “rev index”, the FSLogicalAddressingIndex class is used, e.g. FSLogicalAddressingIndex#lookupP2LEntries returns List<FSP2LEntry>.

To write the P2L “proto index”, the FSP2LProtoIndex class is used.

There’s no other place where FNV-1a checksum is used.
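To make the “4 bytes” point concrete, here is a minimal sketch of the textbook 32-bit FNV-1a hash. It is only an illustration: the class and method names are made up, and I am assuming the plain published algorithm here, so the exact variant and the exact byte range that Subversion/SVNKit feed into it may differ.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of SVNKit: textbook 32-bit FNV-1a.
final class Fnv1aSketch {
    private static final int OFFSET_BASIS = 0x811C9DC5; // standard FNV-1a 32-bit offset basis
    private static final int PRIME = 0x01000193;        // standard FNV 32-bit prime

    static int fnv1a32(byte[] data) {
        int hash = OFFSET_BASIS;
        for (byte b : data) {
            hash ^= (b & 0xFF); // XOR the next byte in first (the "1a" order)...
            hash *= PRIME;      // ...then multiply; int overflow wraps modulo 2^32
        }
        return hash;
    }

    public static void main(String[] args) {
        byte[] sample = "abc\n".getBytes(StandardCharsets.US_ASCII);
        // The result always fits in a Java int, i.e. 4 bytes.
        System.out.printf("%08x%n", fnv1a32(sample));
    }
}
```

The result is a plain `int`, which matches the type of the checksum field described above.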

The “rev indexes” have their own checksums indeed; you’re right on this point. You can see that from FSRoot#writeIndexData:

    final long l2pOffset = protoFile.getPosition();
    final String l2pChecksum = writeL2PIndex(protoFile, newRevision, txnId);
    final long p2lOffset = protoFile.getPosition();
    final String p2lChecksum = writeP2LIndex(protoFile, newRevision, txnId);

These checksums are MD5.

If you look inside FSRoot#writeP2LIndex (for FSRoot#writeL2PIndex this is also true), you’ll see

    final SVNChecksumOutputStream checksumOutputStream = new SVNChecksumOutputStream(protoFile, SVNChecksumOutputStream.MD5_ALGORITHM, false);

i.e. the MD5-generating output stream is created for the current position of the “proto file” and then P2L “rev index” is written to it, starting with “P2L-INDEX\n” header (FSLogicalAddressingIndex.P2L_STREAM_PREFIX constant). So the checksum is just MD5 of the P2L “rev index”.
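The same idea can be sketched with plain JDK classes. This is not SVNKit code: `java.security.DigestOutputStream` stands in for SVNChecksumOutputStream, a `ByteArrayOutputStream` stands in for the “proto file”, and the class and method names are hypothetical. The point is only that the digest covers the bytes written through the wrapper, not whatever was already in the file.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.security.DigestOutputStream;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical illustration: MD5 of only the section appended to a file.
final class SectionChecksumSketch {
    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b & 0xFF));
        }
        return sb.toString();
    }

    // Appends `section` to `file` and returns the MD5 hex digest of the appended bytes only.
    static String appendWithMd5(ByteArrayOutputStream file, byte[] section) {
        try {
            MessageDigest md5 = MessageDigest.getInstance("MD5");
            // The wrapper starts at the current end of `file`; earlier bytes are not digested.
            DigestOutputStream out = new DigestOutputStream(file, md5);
            out.write(section);
            out.flush();
            return toHex(md5.digest());
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        ByteArrayOutputStream file = new ByteArrayOutputStream();
        byte[] earlier = "earlier revision data".getBytes(StandardCharsets.US_ASCII);
        file.write(earlier, 0, earlier.length); // content that must not affect the checksum
        // The index bytes would follow the header; only the header is written in this sketch.
        byte[] index = "P2L-INDEX\n".getBytes(StandardCharsets.US_ASCII);
        System.out.println(appendWithMd5(file, index));
    }
}
```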

Once the checksums are calculated, the footer is formed as follows (FSRoot#writeIndexData):

    final StringBuilder footerBuilder = new StringBuilder();
    footerBuilder.append(l2pOffset);
    footerBuilder.append(' ');
    footerBuilder.append(l2pChecksum);
    footerBuilder.append(' ');
    footerBuilder.append(p2lOffset);
    footerBuilder.append(' ');
    footerBuilder.append(p2lChecksum);
    final String footerString = footerBuilder.toString();

and then the footer length is also written.
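If you need to go the other way in your code, the footer string can be split back into its four fields. The sketch below is an assumption-laden illustration, not SVNKit’s parser: the class name is hypothetical, it only handles the space-separated string built above, and it does not deal with how the footer length itself is stored.

```java
// Hypothetical holder for the four space-separated footer fields.
final class FooterSketch {
    final long l2pOffset;
    final String l2pChecksum;
    final long p2lOffset;
    final String p2lChecksum;

    FooterSketch(long l2pOffset, String l2pChecksum, long p2lOffset, String p2lChecksum) {
        this.l2pOffset = l2pOffset;
        this.l2pChecksum = l2pChecksum;
        this.p2lOffset = p2lOffset;
        this.p2lChecksum = p2lChecksum;
    }

    // Same layout as the StringBuilder code: "<l2pOffset> <l2pMD5> <p2lOffset> <p2lMD5>".
    String format() {
        return l2pOffset + " " + l2pChecksum + " " + p2lOffset + " " + p2lChecksum;
    }

    static FooterSketch parse(String footer) {
        String[] parts = footer.split(" ");
        if (parts.length != 4) {
            throw new IllegalArgumentException("Expected 4 footer fields, got " + parts.length);
        }
        return new FooterSketch(Long.parseLong(parts[0]), parts[1],
                                Long.parseLong(parts[2]), parts[3]);
    }

    public static void main(String[] args) {
        // Offsets and checksums here are made-up sample values.
        FooterSketch footer = new FooterSketch(
                160, "0bee89b07a248e27c83fc3d5951213c1",
                175, "0bee89b07a248e27c83fc3d5951213c1");
        System.out.println(parse(footer.format()).p2lOffset);
    }
}
```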

MD5 and SHA-1 are also used in representations: FSRepresentation#myMD5HexDigest and FSRepresentation#mySHA1HexDigest. If a representation represents a file, they equal the MD5 and SHA-1 of the file’s content. FNV-1a, in contrast, is calculated over the representation as it is encoded and stored in the “rev file”. E.g. if I have a file with content “abc\n”, its MD5 and SHA-1 are:

    $ md5sum file
    0bee89b07a248e27c83fc3d5951213c1 file
    $ sha1sum file
    03cfd743661f07975fa2f1220c5194cbaff48451 file

and if I look inside the “rev file”, it starts with

    00000000 44 45 4c 54 41 0a 53 56 4e 02 00 00 04 02 05 01 |DELTA.SVN.......|
    00000010 84 04 61 62 63 0a 45 4e 44 52 45 50 0a 69 64 3a |..abc.ENDREP.id:|
    00000020 20 30 2d 31 2e 30 2e 72 31 2f 34 0a 74 79 70 65 | 0-1.0.r1/4.type|
    00000030 3a 20 66 69 6c 65 0a 63 6f 75 6e 74 3a 20 30 0a |: file.count: 0.|
    00000040 74 65 78 74 3a 20 31 20 33 20 31 36 20 34 20 30 |text: 1 3 16 4 0|
    00000050 62 65 65 38 39 62 30 37 61 32 34 38 65 32 37 63 |bee89b07a248e27c|
    00000060 38 33 66 63 33 64 35 39 35 31 32 31 33 63 31 20 |83fc3d5951213c1 |
    00000070 30 33 63 66 64 37 34 33 36 36 31 66 30 37 39 37 |03cfd743661f0797|
    00000080 35 66 61 32 66 31 32 32 30 63 35 31 39 34 63 62 |5fa2f1220c5194cb|
    00000090 61 66 66 34 38 34 35 31 20 30 2d 30 2f 5f 32 0a |aff48451 0-0/_2.|
    ...

As you see, it contains both the “0bee…” and “03cfd…” hashes as strings. FNV-1a, however, is probably calculated over … I’m not sure exactly which part, but over some part of this “rev file”, not just over “abc\n”.
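If you want to verify the representation digests from Java rather than from the shell, a minimal sketch with the JDK’s `MessageDigest` looks like this. The class and method names are hypothetical; only the “abc\n” content and the two expected hex strings come from the example above.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: hex digest of raw file content, as stored in the "text:" line.
final class RepresentationDigestSketch {
    static String hexDigest(String algorithm, byte[] content) {
        try {
            byte[] digest = MessageDigest.getInstance(algorithm).digest(content);
            StringBuilder sb = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                sb.append(String.format("%02x", b & 0xFF));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // The digests are taken over the plain content, not over the DELTA-encoded bytes.
        byte[] content = "abc\n".getBytes(StandardCharsets.US_ASCII);
        System.out.println(hexDigest("MD5", content));   // 0bee89b07a248e27c83fc3d5951213c1
        System.out.println(hexDigest("SHA-1", content)); // 03cfd743661f07975fa2f1220c5194cbaff48451
    }
}
```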

Ok, that’s all for today, to be continued.

By the way, you might also be interested in reading about the “layout sharded” feature (it prevents too many files from piling up in the “db/revs” directory); otherwise your code might fail for some large sharded repositories.
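As a rough sketch of what sharding means for your code: with a sharded layout, a revision file lives in a numbered subdirectory of “db/revs” instead of directly in it. The helper below is hypothetical and rests on my assumptions that the shard size is the number from the “layout sharded N” line in “db/format” and that the shard directory is the revision divided by that number; it does not handle packed shards.

```java
// Hypothetical helper: where to look for an unpacked revision file.
final class ShardedLayoutSketch {
    // shardSize <= 0 is used here to mean "no sharding" (all files directly in db/revs).
    static String revisionPath(long revision, long shardSize) {
        if (shardSize <= 0) {
            return "db/revs/" + revision;
        }
        long shard = revision / shardSize; // integer division picks the shard directory
        return "db/revs/" + shard + "/" + revision;
    }

    public static void main(String[] args) {
        System.out.println(revisionPath(1234, 1000)); // db/revs/1/1234
        System.out.println(revisionPath(1234, 0));    // db/revs/1234
    }
}
```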